How's the second week of winter break going for you? How many times have you screamed at your kids? Fed them Christmas candy for breakfast? Confiscated your toddler's new set of horns, a gift from your relative with a grudge?

I've found a surefire way to cheer myself up whenever I feel exhausted and guilty at the end of a Bad Mommy day. No, I don't look through my kids' "You're the best mom in the world!" cards -- you do realize their teachers force them to write that. Instead, I cope by thinking about other women who are much worse mothers than I am. I'm talking celebrity moms. Moms who are impossibly rich and beautiful but can't be bothered to whip up some Kraft mac 'n' cheese. Moms like Britney Spears, who not only smokes around her kids, but also allows them to play with her cigarettes and lighter.

It's probably not fair of me to pick on poor Britney. If the paparazzi were tailing my family, they'd probably catch my kids setting the dog on fire. And I wouldn't look half as good in that bikini.

While Brit probably knows about smoking's ill effects on her own health, she may not be fully aware of the dangers of secondhand smoke, including increased risks of sudden infant death syndrome, ear infections, and asthma, not to mention a higher likelihood that her own children will smoke in the future. In fact, half of parents who smoke claim they have never been counseled by a pediatrician to stop.

Keeping your child healthy should be a powerful motivation to quit, but is this protective instinct enough to overcome the addiction? A meta-analysis published in Pediatrics this week found that programs that counsel parents on the dangers of secondhand smoke do increase the chances of quitting successfully, though the benefit was small: 23% in the intervention groups quit, compared to 18% in the control groups. Interestingly, parents of children over the age of 4 were more likely to quit with counseling, while those of younger children weren't. The authors speculated that despite the increased risk of SIDS, mothers with newborns may be less able to stop smoking during this particularly stressful time. Another possible reason is that as kids get older, parents may be more motivated to model healthy behaviors.
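To put "small" in perspective, here's a quick back-of-the-envelope sketch in Python using only the two quit rates quoted above (23% vs. 18%). The relative increase and the "number needed to counsel" (borrowed from the standard number-needed-to-treat formula, 1 divided by the absolute difference) are simple derived arithmetic, not figures the meta-analysis itself reported.

```python
# Back-of-the-envelope arithmetic on the two quit rates quoted in the post.
# "Number needed to counsel" borrows the standard number-needed-to-treat
# formula (1 / absolute difference); the meta-analysis didn't report it.

quit_counseled = 0.23  # quit rate in the intervention groups
quit_control = 0.18    # quit rate in the control groups

# Absolute difference between the groups (5 percentage points).
absolute_difference = quit_counseled - quit_control

# Relative increase over the control rate, which sounds much more impressive.
relative_increase = absolute_difference / quit_control

# Roughly how many parents must be counseled for one extra parent to quit.
number_needed_to_counsel = 1 / absolute_difference

print(f"Absolute difference: {absolute_difference:.0%}")
print(f"Relative increase: {relative_increase:.0%}")
print(f"Parents counseled per extra quitter: {number_needed_to_counsel:.0f}")
```

On these numbers, about 20 parents would need counseling for one additional parent to quit, even though a "28% relative increase" would make a catchier headline.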

So for those of you still trying to quit, stick with it. Make a New Year's resolution. Ask your pediatrician to give you a pep talk at your child's next visit, and make an appointment with your own doctor to see if you should be prescribed any medication to help you quit. Don't be surprised if this is the hardest thing you've ever had to do. In fact, the tobacco companies are on to you -- there are more cigarette ads in January and February than in any other month. If you relapse, don't beat yourself up. It takes the average smoker eight tries before she's able to quit for good.

If all else fails, just think of Britney.

Thursday, December 29, 2011

Wednesday, December 21, 2011

Meeting Mr. Sa (a.k.a. Frank Pus)

As a working mom, I accept that my toddler is going to be exposed to drool, snot and microscopic fecal contamination from his fellow daycare inmates. But I draw the line at pus. So imagine my dismay when one of my son's caregivers pulled me aside and said, "I get a lot of boils. Would you mind taking a look?" Whereupon she rolled up her shirt, revealing a lovely specimen, which fortunately had already burst and dried up. Most boils and abscesses are caused by Staphylococcus aureus, and in my area, about 60% are methicillin-resistant.

MRSA (methicillin-resistant Staphylococcus aureus), along with some forms of strep, is commonly described in the media as the "flesh-eating bacteria." While MRSA can cause serious, life-threatening infections, more often it causes nettlesome skin infections that may require incision and drainage and treatment with specific classes of antibiotics. The classic presentation is that of a "spider bite," sans spider.

Source: Dermatlas.org

It used to be that MRSA was seen primarily in hospitalized patients, but in the past 10 to 15 years, we've seen a meteoric rise in a particular strain in the community. MRSA is contagious, and pediatric outbreaks have been described in daycare centers and on sports teams.

So what can you do to protect your child? Unless you plan on raising a bubble boy or girl, MRSA is not entirely preventable. It's best to avoid sharing sweaty sports equipment and towels, which are often colonized. And there is another thing you can do to reduce the risk: Avoid unnecessary antibiotics. Antibiotics wipe out the good bacteria with the bad, allowing resistant strains to flourish. And many conditions frequently treated with antibiotics, such as ear infections, tend to resolve on their own anyway.

A recently published study looked at all the MRSA diagnoses in kids from 400 general practices in the U.K. and compared those children to same-age controls. The investigators then looked at the kids' exposure to antibiotics 1 to 6 months before the MRSA infection. Children who were infected with MRSA were three times as likely to have received antibiotics during that period as those who weren't infected. The more antibiotics a child received, or the broader the antibiotic's spectrum of activity, the stronger the association. Of course, it's possible that a child receiving multiple antibiotics is just more prone to infections, and that the antibiotics per se are not causing the MRSA. But the authors still found an association after controlling for underlying diseases, such as diabetes and asthma.

Here's another disturbing possibility: some of those kids might have actually picked up the MRSA at the doctor's office. Healthcare workers have colonization rates of up to 15%, and you know that most of us don't wash our hands after we pick our noses. Even worse, one study found that a third of the stethoscopes in an ER were contaminated with MRSA. Since I work in a hospital, I could hardly have blamed my daycare if my son had become infected.

Bringing home the superbug

I admit I was caught flat-footed by this curbside consult, and I ended up advising my son's caregiver to see her own doctor. I told her a little about decolonization protocols, which involve bathing with antiseptics, taking antibiotics and lacing your nostrils with Bacitracin. Unfortunately, unless you place all your clothes, bedding and pets on a bonfire*, re-colonization is the norm, so these protocols aren't often used. Though I told her she had a bacterial infection, I avoided using the M-word in front of the other parents. I also didn't recommend staying home during her outbreaks, though I did suggest she cover up her boils with gauze. Afterwards I tried to hand off my kid to the other providers as discreetly as possible. This happened many years ago, and I still wonder whether I did the right thing.

What would you have done?

*Kidding! I don't want to be held responsible for any hamster roasts.

Tuesday, December 13, 2011

Cuckoo for Cocoa Puffs?

A visit to Jelly Belly heaven....

will I be paying for this later?

One of the enduring myths of Christmas (aside from the man with the bag) is that eating all those candy canes will make your kids go bonkers. There are certainly observational studies linking sugar intake to inattention and impulsivity. But if you look at only the well-designed, double-blind, placebo-controlled studies (there have been at least a dozen), not one has found that sugar has a deleterious effect on behavior in kids, even in those with attention deficit-hyperactivity disorder (ADHD).

The definitive study was performed in normal preschoolers, as well as in 6- to 10-year-olds whose parents described them as "sugar sensitive." The care involved in the design of this trial was remarkable. A dietitian supervised the removal of all the food from the home, except for coffee and alcohol "as long as they were not consumed by the children." The families were then provided with meals for the next 9 weeks. The experimental diets, which rotated every three weeks, included one that used sucrose (sugar) as a sweetener, one that used aspartame (Nutrasweet), and one that used saccharin. The families were not told the hypothesis, or what substitutions were made. In fact, the investigators created sham diets that changed every week, to throw the parents off the scent. One sham diet, for instance, consisted mainly of red and orange foods (although no artificial food coloring or additives were allowed). Every three weeks, children underwent tests of their memory, attention, motor skills, reading and math performance. Interviewers also surveyed parents and teachers about their kids' behavior.

The blinding was near perfect; only one parent correctly identified the sequence of diets. Even though 48 tests and surveys were conducted per child, all but one failed to find a difference in cognition or behavior among the three diets. The exception? Children on the sugar diet scored significantly better on the cognition portion of the Pediatric Behavior Scale. I'm tempted to use this as a post hoc justification for letting my kids eat Cap'n Crunch, but it's probably just a chance finding.
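Why "probably just a chance finding"? Here's a rough Python sketch of the multiple-comparisons problem. It assumes, purely for illustration, that the 48 comparisons were independent tests each run at the conventional 5% significance level; the real outcomes were surely correlated, and the threshold the study used isn't stated here.

```python
# Rough illustration of why one "significant" result among many tests
# may be a fluke. Hypothetical assumptions: 48 independent tests,
# each at the conventional alpha = 0.05, and no true effect of sugar.

n_tests = 48
alpha = 0.05

# Expected number of false positives when there is no real effect.
expected_false_positives = n_tests * alpha

# Probability that at least one test comes out "significant" by chance.
p_at_least_one = 1 - (1 - alpha) ** n_tests

print(f"Expected chance findings: {expected_false_positives:.1f}")
print(f"P(at least one chance finding): {p_at_least_one:.0%}")
```

Under those assumptions you'd expect two or three "significant" results from noise alone, and better than a 90% chance of at least one, so a single lonely positive finding isn't much to hang a cereal policy on.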

Nonetheless, some parents continue to insist that sweets make their kids hyper. Once you believe something, you're more likely to see it. In one study, thirty-five 5- to 7-year-old boys who were reported to be sensitive to sugar were randomized into two groups. Mothers in one group were told their sons would receive a sugary drink; mothers in the other group were told their sons would receive a Nutrasweet drink. The researchers then videotaped the boys playing by themselves and with their moms. The boys also wore an "actometer" on their wrists and ankles as an objective measure of their activity level.

Here's the twist: Both groups actually received Nutrasweet. As expected, there was no significant difference in the boys' activity levels by videotape review or actometer readings. But the mothers who thought their sons had consumed sugar reported significantly more hyperactivity during the play session than those who had been told their sons were drinking Nutrasweet. The videotape reviewers (who were blinded to the intervention) also found that the mothers who thought their boys drank sugar were more likely to hover around them, yet they scored lower in warmth and friendliness. It was kind of a mean study, if you think about it. First, they lied to the moms, then they slammed them for being more vigilant.

So why, despite the plethora of data to the contrary, has the sugar myth persisted? It's possible that something else in the sweets, such as food coloring or caffeine, causes hyperactivity. And think about it: When do we let our kids consume copious amounts of sugar? On birthdays, Halloween, and Christmas -- all recipes for going a little nuts.

Thursday, December 8, 2011

Now With More Protein!

My kids' favorite cereal is Cap'n Crunch Crunch Berries. Hey, I'm a health-minded mom; I make certain that they get a serving of fruit every morning. Sure, Crunch Berries might not have the same anti-oxidant, cancer-fighting properties as acai berries. (Amazonian natives don't get cancer, so it must be true!) But I sure ain't gonna feed 'em the fruitless stuff.

They were rooting around in the pantry for their fix when they came across this old cereal container:

I tried to pass off the larvae* as a science experiment/proof of spontaneous generation/Christmas surprise, but my kids would have none of it. I was tempted to call the Captain himself to complain about the inaccurate nutrition labelling, when my husband discovered another little squirmer in a half-opened box of Cinnamon Life. Somehow finding one larva in your cereal is way more disturbing than finding a colony of them.

No doubt some of you are more disgusted that I let my kids eat sugary cereal than by the fact that my pantry is an insect zoo. And indeed, you would be in the right. There are no studies examining the larval content of sugary cereals, but there is a study showing that there is (brace yourself) sugar in sugary cereal.

The Environmental Working Group released a report this week on the sugar content of 84 popular breakfast cereals. Only one in four met the U.S. government's guideline of having less than 26% added sugar by weight. The worst was Kellogg's Honey Smacks (formerly known as Sugar Smacks; there used to be truth in advertising), followed closely by Post Golden Crisp. Cap'n Crunch Crunch Berries came in 9th, with 42% added sugar. A cup has 11 grams of sugar, which is less than a Twinkie but more than two Oreos. You can imagine how upset I was when I read that. I've since reformed my ways, and this is what I now serve my kids in the morning:

Chocolate's an anti-oxidant, isn't it?

*They weren't maggots; maggots eat meat, not fake berries. I have no idea what these larvae would have metamorphosed into. (Any entomologists among my readers?) We sprayed them with Raid, squished them, burned them, and scattered their ashes in a lovely forest glen.

Tuesday, December 6, 2011

Vaccinonomics

Warning: This is one of my wonkier postings. Read on if you'd like to learn more about the supposed science of economic analysis, and how it shapes healthcare policy.

How much would you pay to keep this little critter away from your child?*

I don't have a compelling personal anecdote about meningitis, and I hope I never do. Meningococcus is one of the more common causes of meningitis, and this bug gives even hardened doctors and nurses the heebie-jeebies. For one thing, it spreads by close contact, so members of the same household, or healthcare workers exposed to secretions, must take antibiotics to ward off the same fate. And if you don't die from meningococcus, you could end up with brain damage or multiple limb amputations, since one of the complications is gangrene.

So we should be thrilled that there's a vaccine against the most common serotypes that cause disease in adolescents and young adults, who are particularly susceptible to this infection. In the past, a single dose at age 11 or 12 was thought to be protective for 10 years, but recent studies have found that immunity lasts for only five. Last week, the American Academy of Pediatrics issued a statement recommending a second, booster dose for 16-year-olds.

No one argues that adding a booster won't save lives. But is it worth the extra cost, given that meningococcus is still a relatively uncommon disease? Already, kids routinely receive about 30 shots in their childhood -- double the number back in 1980. An editorial in the New England Journal of Medicine argued that "routine adolescent [meningococcal vaccine] does not provide good value for money, largely because of low disease incidence rates and relatively high vaccine cost."

You might argue that you can't put a price on a human life, but it turns out you can. Economic analysis is the science of quantifying the cost of healthcare interventions, but as you'll see, there are a lot of smoke and mirrors involved.

Let's start off with the basics. One way to measure the cost-effectiveness of a vaccine (or a pill, or seatbelts, or virtually anything) is to express it in dollars per life-year saved. You can see right away that if you had a vaccine that was equally effective across all age groups, it would be far cheaper per life-year saved to vaccinate a baby than a 70-year-old, since preventing a death could potentially add 80 years to the baby's life, but only 10 years to Grandpa's. It doesn't mean the baby's life is worth more, only that the vaccine is a bargain when given in infancy.
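Here's a toy version of that arithmetic in Python. The $1,000 figure and the lifespans are made-up round numbers chosen only to mirror the baby-versus-Grandpa example; they don't come from any real vaccine analysis.

```python
# Toy cost-per-life-year calculation mirroring the baby-vs-Grandpa example.
# All numbers are hypothetical round figures, not real vaccine data.

def cost_per_life_year(cost: float, life_years_gained: float) -> float:
    """Dollars spent per year of life added."""
    return cost / life_years_gained

# Hypothetical cost of vaccinating enough people to prevent one death,
# assumed to be the same at any age (the "equally effective" premise).
cost_to_prevent_one_death = 1_000.0

baby = cost_per_life_year(cost_to_prevent_one_death, life_years_gained=80)
grandpa = cost_per_life_year(cost_to_prevent_one_death, life_years_gained=10)

print(f"Baby:    ${baby:,.2f} per life-year")
print(f"Grandpa: ${grandpa:,.2f} per life-year")
```

Same price tag, same death prevented, but the baby's 80 extra years make each life-year eight times cheaper ($12.50 vs. $100.00 here).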

Some illnesses rarely cause death, but there may still be value in preventing them, to avoid complications, hospitalizations or lost productivity. So most economists use the measure of dollars per quality-adjusted life-year, or QALY, saved. How do economists quantify quality? Simple: They ask patients, "If 1 is the value of a perfectly healthy life, and 0 is death, how would you rate having this condition?" Suffering through a cold might be 0.999, while being hooked up to a ventilator and feeding tube might be 0.1. (On this simple 0-to-1 scale there are no negative numbers, though there are probably some fates worse than death.) Already, you can see one of the inherent problems with economic analysis -- quality is an extremely subjective measure.
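To see how those quality ratings feed into the math, here's a minimal Python sketch. It uses the simple convention of multiplying years lived by a 0-to-1 quality weight; the scenario, the weights (0.5 and 1.0), and the $100,000 price tag are all invented for illustration.

```python
# Minimal QALY sketch: years lived multiplied by a 0-to-1 quality weight.
# The scenario, weights, and cost below are hypothetical.

def qalys(years: float, quality_weight: float) -> float:
    """Quality-adjusted life-years for a stretch of time at one weight."""
    if not 0.0 <= quality_weight <= 1.0:
        raise ValueError("quality weight must be between 0 and 1 on this scale")
    return years * quality_weight

# Hypothetical: 10 years lived with a condition rated 0.5,
# versus 10 years in full health after a $100,000 treatment.
without_treatment = qalys(10, 0.5)
with_treatment = qalys(10, 1.0)
qalys_gained = with_treatment - without_treatment

extra_cost = 100_000.0
cost_per_qaly = extra_cost / qalys_gained

print(f"QALYs gained: {qalys_gained}")
print(f"Cost per QALY: ${cost_per_qaly:,.0f}")
```

Nobody's life is extended here, yet the treatment "buys" 5 QALYs at $20,000 each. Nudge that 0.5 rating up or down and the price per QALY swings accordingly, which is exactly why the subjectivity matters.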

Other methodologic difficulties with this type of research involve deciding what to include in the accounting of costs and benefits, and which estimates to use. Do you analyze the economics from the individual's standpoint, or society's, or the third-party payer's? Each analysis is specific to its country; you can't take an analysis from, say, Singapore, and apply it in the U.K. And economic analyses go out of date quickly: not only is our body of scientific knowledge constantly changing, but costs fluctuate, too. Econ analysis for vaccines is especially tricky, since you have to take herd immunity into account. In other words, the benefits of immunization may extend beyond the immunized.

One popular myth is that a "cost-effective" intervention saves money. In fact, most modern prevention and treatment measures don't save money at all. The biggest exception? Almost all routine early childhood immunizations, such as the measles and polio vaccines, save money. The same isn't true, though, for the newer vaccines targeting tweens and teens. Why is that?

Well, for one thing, adolescents are a hardy group. Their immune systems are stronger than infants', and when they do die, it's often a result of their own stupidity -- think of texting while driving. On top of that, there's no loss in productivity when they're sick. (Insert your own lazy teenager joke here.) An adult takes time off from work for illness, and a parent needs to stay home with a sick toddler, but a jobless 16-year-old with the flu can fend for himself. And then there's the fact that the newer vaccines aimed at this age group are a lot more expensive than the older ones. So let's look at the cost-effectiveness of some of these vaccines in the U.S.:

The meningococcal vaccine is one of the more expensive, at $88,000 per QALY saved. Adding the booster dose ends up costing about the same per QALY, since even though you double the cost, you also save more lives.

The annual influenza vaccine in 12- to 17-year-olds is very pricey, at $119,000 per QALY. Compare this to only $11,000 per QALY in 6- to 23-month-olds.

The human papillomavirus (HPV) vaccine wasn't too bad, at $15,000 to $24,000 per QALY, although it's much more expensive per QALY to vaccinate boys than girls, since cervical cancer is more common than penile or anal cancer, and reducing HPV in girls should also reduce the frequency of screening for, and treatment of, precancerous lesions.

Hepatitis A ranged from cost-saving in college freshmen to $40,000 per QALY in 15-year-olds. (The wide range should clue you in to the fragility of these economic models.)

The cheapest vaccine? The pertussis booster, at the bargain basement price of $6,300 per life-year saved. Outbreaks of pertussis, or whooping cough, have been linked to waning immunity in adolescents and adults, and while whooping cough is not particularly dangerous to older kids, it's very contagious and can kill unimmunized newborns. Much of its cost-effectiveness derives from herd immunity and from the fact that the pertussis vaccine is older and cheaper. Middle school students in California are now required to get the pertussis booster.
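Back to the meningococcal booster: why doesn't doubling the doses double the price per QALY? Because cost per QALY is a ratio, and if the cost and the QALYs saved both roughly double, the ratio barely moves. Here's a Python sketch with invented program-level numbers, chosen only so that the single-dose ratio matches the $88,000 figure above.

```python
# Cost per QALY is a ratio, so scaling cost and benefit together leaves it unchanged.
# Hypothetical program totals, picked only so the single-dose ratio
# equals the $88,000-per-QALY figure quoted in the post.

def cost_per_qaly(total_cost: float, qalys_saved: float) -> float:
    """Dollars spent per quality-adjusted life-year saved."""
    return total_cost / qalys_saved

single_dose = cost_per_qaly(total_cost=88_000_000, qalys_saved=1_000)

# Hypothetical booster scenario: twice the cost, twice the QALYs saved.
two_doses = cost_per_qaly(total_cost=176_000_000, qalys_saved=2_000)

print(f"One dose:  ${single_dose:,.0f} per QALY")
print(f"Two doses: ${two_doses:,.0f} per QALY")
```

In a real analysis the extra QALYs from a booster wouldn't scale this neatly, which is why the post says "about the same" rather than "identical."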

Of course, these numbers give the illusion of hardness to a science that's based on the softest of data. And what is the definition of a "cost-effective" intervention anyway? By convention, a maximum of $50,000 per QALY saved is considered cost-effective. There's no particular logic behind this number, and it hasn't budged in the past two decades, despite inflation. And $50,000 may be a year's salary for one family, or the price of a car for another. But that's the figure in the minds of policy makers when they try to decide whether a new treatment should be covered by insurance.

Here's another way of looking at the numbers: The NEJM editorial laments that the public-sector cost of immunizing one child until adulthood (not including annual flu vaccines) is about $1,450 for males and $1,800 for females. I was surprised to see that this number was so low. After all, we spend much more than that educating and clothing our children. Heck, $100 a year is less than my caffeine budget. Shouldn't we be spending at least that much to keep our kids healthy?
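
Where does "$100 a year" come from? Here's a back-of-the-envelope check in Python. The lifetime totals are the ones quoted from the NEJM editorial; spreading them evenly over 18 years of childhood is my own assumption, since the editorial doesn't break the cost down by year.

```python
# Public-sector cost of immunizing one child to adulthood,
# excluding annual flu vaccines, as quoted from the NEJM editorial.
LIFETIME_COST = {"male": 1_450, "female": 1_800}

# Assumption (not from the editorial): cost spread evenly over 18 years.
YEARS = 18


def per_year(total: float, years: int = YEARS) -> float:
    """Average yearly cost if the lifetime total is spread evenly."""
    return total / years


for sex, total in LIFETIME_COST.items():
    print(f"{sex}: ${total:,} total, about ${per_year(total):,.0f} a year")
```

That works out to roughly $81 a year for boys and $100 a year for girls.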

*For you microbiologists out there, this is technically gonorrhea -- but it's in the same family of bacteria. Giant Microbes apparently found there's a bigger market for an STD than for meningitis.

Tuesday, November 29, 2011

All I Want for Christmas Is....Everything

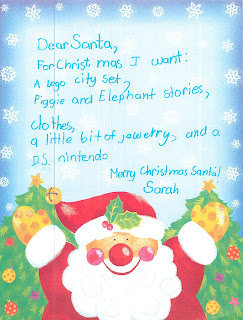

My kids wrote their letters to Santa today. There was no pussyfooting, no sucking up. They cut right to the chase. Here's my 8-year-old's letter:

And my 6-year-old's:

And my 19-month-old's, courtesy of his older sister:

I think they're trying to tell me something.

It's easy to assign the lion's share of blame for the commercialization of Christmas; all you have to do is reach for the remote. There's no question that T.V. advertising triggers the whiny demands. Every published study on this topic has noted a correlation between T.V. viewing frequency and purchase requests for food and toys in children. No surprise here -- why would companies spend billions of dollars producing commercials if they didn't work?

My favorite studies were conducted in the U.K., analyzing the content of letters to Father Christmas (Santa, to us Yanks) and surveying children and their parents about their viewing habits. The investigators also reviewed toy commercials on children's networks for the 6 weeks leading up to the holiday season. One of the more remarkable findings was that toy ads ran an average of 33 times an hour -- and this figure doesn't even include ads for food or other shows. The first study, conducted in 3- to 6-year-olds (who were allowed to dictate or draw their letters), found that the number of items requested went up with the amount of T.V. viewing. There was no associated increase in requests for advertised brands, probably because the children were too young to recall specific names. The study was then repeated in 6- to 8-year-olds, and some interesting trends emerged. For one thing, the kids got greedier, with the average number of demands increasing from 3 to 5 items. (I was relieved to discover my kids are no more spoiled than the average brat.) For another, the more T.V. a kid watched, the more advertised brands he or she would request. Girls were more susceptible to brand-name recognition (with Bratz dolls being the most popular), though peer pressure may also have played a role, as the letters were written in a classroom setting.

Dealing with the deluge of requests around Christmas is annoying, but can advertising actually impact the moral development of children? Five studies have looked at whether T.V. viewing is associated with materialism. Greed was measured by asking children to agree or disagree with statements such as "Money can buy happiness" or "My dream in life is to be able to own expensive things," or by having them choose between toys and friends. Four out of the five studies found significant correlations between the amount of T.V. watched and materialistic values.

So what's the best way to grapple with the gimmes? The "duh" answer is to ban the kiddos from watching any T.V., at least from November onward, but most parents aren't willing to go to such extremes. You could outlaw advertising aimed towards children, which is what Sweden does* -- but we all know that the advertising industry would never allow that to happen in the States.

Believe it or not, a randomized controlled trial exists to answer this question. In this study, third- and fourth-graders in one school were randomized to a media reduction program, while kids at another school served as the control. The kids in the intervention group received 18 sermons on reducing T.V., videotape and video game use, after which they were challenged to go media-free for 10 days. Incredibly, two-thirds of the kids succeeded with this challenge. The parents were then given an electronic T.V. time manager that restricted usage to 7 hours a week. Only 40% complied with this portion, possibly because Dad (or, not to be sexist, Mom) wasn't willing to give up his ESPN. The kids and parents were asked at the beginning and end of the study about the number of requests for toys seen on T.V. in the previous week. While there were no differences in toy requests at the beginning of the study, by the end, the kids in the intervention group had reduced their number of demands by 70%.

While it's unlikely that schools will implement this intensive curriculum, most parents could aim for a T.V. budget of 7 hours a week. I love the idea of installing a T.V. time manager, since kids know that it's useless arguing with a computer. Another strategy is to watch T.V. with your kids and talk to them about the commercials. Over half of the 6- to 8-year-olds in the Santa letters study couldn't explain what an advertisement was. Studies have shown that talking to your kids about commercials mitigates their insidious influence.

The kids don't know it yet, but maybe Santa will surprise them with a T.V. time manager. That's what happens when you try to shake down the big guy. In the meantime, anyone know where I can buy some fake drool?**

*In fact, in a small substudy, Swedish children requested fewer items in their Father Christmas letters than did children from the U.K.

**My daughter's explanation: "It's just like fake vomit -- you can use it to gross people out!" Not sure why JoJo would need any, since he produces copious amounts of the real stuff.

Tuesday, November 22, 2011

Flying the Fussy Skies

Carseat, check. Breastpump, check. Diaper bag, check.

Big brother, check. Baby...baby??

Three and a half million U.S. travelers are expected to fly over the Thanksgiving holiday this year, and about 1% of passengers are children under the age of 2. That's a whole lotta caterwauling at 25,000 feet. Few things can be as stressful as flying with young children. Here are the answers to some common questions about traveling with kids:

Should I pay for an extra ticket, so my child can sit in her carseat? The FAA has long considered a proposal to require carseats for children under two. Thankfully, they haven't mandated this rule, and here's why: a carseat is highly unlikely to save the life of your kid. That's because plane crashes are extremely rare, and of those, 30% aren't survivable. Even extreme turbulence resulting in serious injury is uncommon. One analysis found that requiring a carseat for every child under two would save 0.4 lives a year in the U.S. I'm not sure, but I think you need at least 50% of your body to survive! The authors estimated that the additional cost of saving one life would be $6.4 million for each dollar of round-trip ticket cost. With the average price of a domestic ticket being $360, that's $2.3 billion to save one life. Now, my kid may be worth that much, but yours isn't -- and I'm sure you'd say the same to me. Not only that, but some families will be deterred by the cost of the extra ticket and end up driving several hundred miles, which is significantly more dangerous than flying. The study concluded that because of this expected shift from air to road travel, requiring carseats on planes will end up killing more children than it saves. That said, bring the carseat along, just in case you win the lottery and find yourself next to an empty seat.
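
The carseat math is easy to lose track of, so here's a quick check in Python. The $6.4 million and $360 figures come from the analysis quoted above; I'm treating the $360 as a round-trip fare, since that's how the per-dollar figure is framed. The function name is my own invention, not anything from the study.

```python
# Figure from the analysis quoted above: each extra dollar of
# round-trip fare adds $6.4 million to the cost of saving one life.
COST_PER_FARE_DOLLAR = 6.4e6

# Assumption: the $360 average domestic fare is a round-trip price.
AVERAGE_FARE = 360


def cost_per_life(fare: float, per_dollar: float = COST_PER_FARE_DOLLAR) -> float:
    """Hypothetical helper: dollars spent per life saved at a given fare."""
    return per_dollar * fare


print(f"${cost_per_life(AVERAGE_FARE) / 1e9:.1f} billion per life saved")
```

Plug in a cheaper fare and the figure drops proportionally, but even at $200 a ticket it's still over a billion dollars per life.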

How can I prevent ear pain? Ear pain is greatest on ascent and descent, as cabin pressure drops and then increases. The pressure in the middle ear lags behind, leading to changes in the volume of air in the ear and causing discomfort. If the Eustachian tubes leading to your middle ear are open, pressure equalizes quickly, relieving pain. Children have smaller Eustachian tubes that often clamp down with viral infections and allergies, so they're more susceptible to pain.

Swallowing helps open the Eustachian tubes, so you can try nursing or bottle feeding your baby, or having your older kids chew gum. The decongestant pseudoephedrine has been shown in randomized, controlled trials to reduce ear pain in adult air travelers. Unfortunately, a small study performed in children under the age of 6 found no reduction in ear pain with ascent or descent.

If your kid has a history of ear pain with flying, you could consider giving an over-the-counter analgesic 30 minutes prior to descent, when the pain is worst. There aren't any studies looking specifically at prevention or treatment of barotrauma ("baro" = pressure), but if I had to choose a medicine, I'd go with ibuprofen, which was shown in a randomized trial to be more effective than Tylenol in treating the pain of ear infection.

I saw a blurb in a parenting magazine about "EarPlanes," ear plugs designed for kids to wear on flights. The problem is, there's no evidence that they work. In one study, each volunteer was given pressure-equalizing earplugs in one ear, and a placebo earplug in the other. The pressure-equalizing earplugs were useless: 75% experienced ear pain on descent. So save the earplugs for yourself; then you won't have to hear the little tyke yowling.

Should I slip my kid a mickey? Some of you will no doubt have trouble with the idea of sedating a child for your own comfort. I don't have a moral objection, but I do have an evidence-based one: It doesn't work, at least not with over-the-counter medications. Diphenhydramine (Benadryl) is the most studied OTC sedative. Although there are no studies of pediatric in-flight sedation, we can extrapolate from the TIRED* study. The exhausted parents of 44 infants with frequent night-time awakenings drugged their progeny with either diphenhydramine or placebo. Almost no child (and by extension, no parent) was reported to have improved sleep by the end of the trial. In addition, Benadryl can cause paradoxical excitation in children -- the last thing you need when they're already giving the passenger in front of them a back massage with their feet.

Bottom line: There's not much you can do to make the flight more comfortable for you, your baby or your fellow passengers, other than the time-tested methods of feeding, holding, and walking him up and down the aisles.

Here's hoping we aren't on the same flight.

*Trial of Infant Response to Diphenhydramine. They kind of had to work for that one.

Monday, November 14, 2011

The Picky Eater

My parents are preparing a traditional Thanksgiving dinner for the extended family this year. They're excited to have all their grandkids at the table, but also a bit nervous, as they're venturing far outside their Chinese food comfort zone. I've warned them, though, not to feel hurt if my 6-year-old daughter eats nothing but mashed potatoes.

That's right, despite our vibrant heritage of Chinese-American gluttony dating back to the Ching dynasty, my family has been cursed with a picky eater.

There is no universally accepted definition of what makes a fussy eater, but most parents say they know it when they see it. Nutritionists and psychologists distinguish between two related conditions. Food neophobia is defined as the fear of trying new foods. It's thought to be an evolutionary vestige from our Neanderthal days, when eating something new, particularly a plant, carried a risk of poisoning or illness. Food neophobes will often reject a new food based on sight or odor alone, refusing to even taste it. Neophobia starts at age 2, when the child becomes more mobile and hence, less supervised, and usually ends at around 6 years of age. Picky eating, on the other hand, is the refusal to eat even familiar foods. Picky eaters may be more willing to taste new foods, but will regularly eat only a narrow range of items. Picky eaters gravitate towards carbohydrates and away from vegetables and protein sources. Unlike food neophobia, many picky eaters do not outgrow this tendency. In reality, there is a great deal of overlap between food neophobia and picky eating, and many kids have both.

Up to half of parents claim to have a picky eater in their family. The health consequences of picky eating aren't clear. Some studies have shown a lower body mass index in picky eaters, while others have found the opposite. Picky eaters tend to take in fewer nutrients and vitamins, though frank deficiency is rare. And one study, which followed children over an average of 11 years, found that picky eaters have more symptoms of anorexia in later adolescence.

Scientists aren't even sure what causes some kids to be picky eaters. Twin studies suggest that about two-thirds of picky eating is genetic, rather than environmental. Part of this may be due to a known inherited variation in the ability to taste bitterness in vegetables; those with higher sensitivity may be more likely to avoid veggies. Picky eating may also be associated with higher rates of anxiety. Regardless of the underlying cause, meal times can often deteriorate into a battle for control, further exacerbating the pickiness.

My daughter Sarah's all-time record was being forced to sit two hours at the dinner table with an unwanted pork chop in her mouth. I finally gave up when she started nodding off. If she was going to choke on something in her sleep, I wanted it to at least be a vegetable. Otherwise, what would the neighbors say?

There are studies examining ways to diversify the picky eater's diet. The French, naturally, are on the cutting edge of gastronomical research.* In schools in Dijon, half of 9-year-olds were assigned to a weekly 90-minute program to train their tender young palates. The sessions included lectures, cooking workshops, and a field trip to a restaurant (though contrary to stereotype, no wine tastings). The children were surveyed before and after the program, and presented with unusual items, such as leek sprouts and dried anchovies, to taste. Kids enrolled in the program were slightly more likely to sample the offerings. Tant pis, ten months after the program ended, their food neophobia had returned to baseline.

Of course, this study is unlikely to be of much help to those of us in the States, where schools are dealing with budget crunches by dropping frivolous subjects, like long division. So what's a beleaguered parent to do? Here are some tips:

Munch on a carrot while breastfeeding your baby. (Or better yet, drink a Bloody Mary -- read my post on drinking while nursing.) Breastfeeding is associated with lower rates of picky eating. It's been well-established that many flavors from a mother's diet are transferred to her breast milk -- which may explain, for instance, why Indian babies have no problems tolerating curry. There's weak evidence that consuming carrot juice while nursing increases an infant's acceptance of carrot puree.** Although there are few studies on this topic, there are plenty of other good reasons to nurse.

Don't reward your children for eating healthy food. Multiple studies have found that if you reward a child for eating something, she will eat more of it in the short term, but she'll end up disliking and eating less of it in the future -- the thought being, "If Mom has to give me a prize to eat this green bean, it must taste terrible." Even verbal praise for consuming a particular food reduces a child's liking for it.

Present healthy food as a reward. Rewarding behavior with food increases the desirability of that food. Note that this trick works best with the very young. One researcher who tried the old "eat your dessert, then you may eat your vegetables" ruse was unable to fool a single 4-year-old.

Expose your child to the food you want him to eat. Then do it again. And again. And again. In fact, studies show you must present a new food to a child a minimum of 10 times before he finally accepts it. One randomized trial had parents in the experimental arm present an unpopular vegetable (most often a bell pepper) to their child every day for 14 days. The parents were to encourage their child to taste it, but not to offer any reward for eating it. At the end of the two weeks, kids in the exposure group increased their liking and consumption of the vegetable, compared to no change in the control group. The problem? Many of the parents weren't able to stick it out for the full 14 days, and when the researchers included these kids in the analysis, there was no benefit.

Set a good example for your kids, and eat your veggies. This won't be a surprise to anyone, but several studies have found that vegetable consumption in kids closely mirrors that of their parents. Hard to know if this is genetic or environmental, or if there's a cause-and-effect relationship, but it can't hurt.

If all else fails, talk to your pediatrician -- preferably someone as non-judgmental as my kids' doctor. I became really concerned when Sarah weighed one pound less at her 4-year-old visit than she did at her 3-year-old one. I bemoaned her tuber-based diet, and asked for suggestions on how I could get her to eat her veggies. Her pediatrician's answer? "Does she eat ketchup with her French fries? Ketchup counts."

Ketchup counts? I've got Thanksgiving covered.

*Public schools in France serve a five-course meal to their students, and no, it's not like in America, where the hors d'oeuvres consist primarily of pommes frites.

**OK, I realize that you could use the same logic to argue that drinking while nursing could give your baby a taste for Jack Daniels. If you're a breastfeeding mom, just pretend that you never read this footnote.

Tuesday, November 8, 2011

The 5-Second Rule

The other day, JoJo chucked his binky in one of his typical fits of pique. Normally, I follow the 5-second rule, scoop it off the floor and plop it back into his mouth (in order to terminate his fit of pique, of course). This time, though, his aim was true:*

After I plucked it out, Rick suggested running it through the dishwasher, but I knew that I could never give that pacifier to my son without making myself queasy. I threw it out, which meant that JoJo's fit of pique matured into a full-blown tantrum.

Afterwards, I wondered whether my husband's blasé attitude about "eau de toilette," or my laissez-faire one about food and binkies hitting the floor, could be justified by any data. My go-to source for health information, Yo Gabba Gabba, seems to contradict my practice:

Brobee picks up his Melba toast in a scant 3 seconds, but already it's swarming with tiny, ugly germs. The little monster learns that germs can make him sick, but sadly, not that Melba toast makes for a terribly tasteless snack.

Since YGG didn't include any references in its credits, I did a literature search, and there was indeed a published study on the "5-second rule." The microbiologists gleefully painted floor tiles, wood and carpet with Salmonella typhi, the agent of typhoid fever, and then dropped bologna and bread on these surfaces for 5, 30 and 60 seconds. They then made some poor undergraduate eat the samples and observed him for signs of illness. Kidding! They probably couldn't get that experiment past an institutional review board. No, they simply cultured the food afterwards, and found that there was almost no difference in the bacterial contamination rates among the 5-, 30- and 60-second groups. They did find that the colony counts were 10 to 100 times lower on the food that fell on the carpet, so think twice before yelling at your kids for snacking on the expensive Oriental rug.

Of course, most households aren't teeming with typhoid fever. So how dirty are your floors? The vaguely sinister Journal of Hygiene published a study of microbial contamination in over 200 homes in Surrey, England. Investigators cultured over 60 sites in the bathroom, kitchen and living room.** Bacteria were found on most surfaces, though the majority of isolates were not pathogens. However, E. coli, which can make you sick if ingested, was found in two-thirds of all households. In general, dry surfaces were rarely contaminated: kitchen and bathroom floors grew E. coli only 3-5% of the time. Toilet water, as you might expect, had E. coli 16% of the time, though at surprisingly low colony counts. The worst area? The kitchen sink, which grew E. coli 19% of the time, with much higher colony counts than toilet water. Dishcloths and drainers were almost as bad.

So what do I make of this data? I think you can safely say that the 5-second rule has been debunked. Fortunately, it turns out that the average household floor isn't that dirty, which means that the rule can be extended to 60 seconds! I usually throw JoJo's binkies into the kitchen sink to wash, but I've learned that reusing his toilet-tainted pacifier would have been less likely to make him sick.

If only I could get past the ick factor.

*True story, but the photo is a re-enactment. I thought about taking a photo when it really happened, but let's just say the bowl was, er, not clean. Like all the other moms I know, I bring my toddler into the bathroom with me so he's not left screaming outside the door. Don't worry, I threw away the second binky too.

**The participants were recruited from "ladies' social clubs," so you could argue that maybe the ladies were scrubbing down the house before the arrival of the research team. The scientists thought ahead and paid repeat, surprise visits and found no significant difference in their culture results.

After I plucked it out, Rick suggested running it through the dishwasher, but I knew that I could never give that pacifier to my son without making myself queasy. I threw it out, which meant that JoJo's fit of pique matured into a full-blown tantrum.

Afterwards, I wondered whether my husband's blasé attitude about "eau de toilette," or my laissez-faire one about food and binkies hitting the floor, could be justified by any data. My go-to source for health information, Yo Gabba Gabba, seems to contradict my practice:

Brobee picks up his Melba toast in a scant 3 seconds, but already it's swarming with tiny, ugly germs. The little monster learns that germs can make him sick, but sadly, not that Melba toast makes for a terribly tasteless snack.

Since YGG didn't include any references in its credits, I did a literature search, and there was indeed a published study on the "5-second rule." The microbiologists gleefully painted floor tiles, wood and carpet with Salmonella Typhimurium, a close cousin of the typhoid bacterium and a common cause of food poisoning, and then dropped bologna and bread on these surfaces for 5, 30 and 60 seconds. They then made some poor undergraduate eat the samples and observed him for signs of illness. Kidding! They probably couldn't get that experiment past an institutional review board. No, they simply cultured the food afterwards, and found almost no difference in bacterial contamination among the 5-, 30- and 60-second groups. They did find that colony counts were 10 to 100 times lower on food that fell on the carpet, so think twice before yelling at your kids for snacking on the expensive Oriental rug.

Of course, most household floors aren't deliberately smeared with Salmonella. So how dirty are your floors? The vaguely sinister Journal of Hygiene published a study of microbial contamination in over 200 homes in Surrey, England. Investigators cultured over 60 sites in the bathroom, kitchen and living room.** Bacteria were found on most surfaces, though most of the isolates were not pathogens. However, E. coli, which can make you sick if ingested, was found in two-thirds of all households. In general, dry surfaces were rarely contaminated: kitchen and bathroom floors grew E. coli only 3-5% of the time. Toilet water, as you might expect, had E. coli 16% of the time, though at surprisingly low colony counts. The worst area? The kitchen sink, which grew E. coli 19% of the time, with much higher colony counts than toilet water. Dishcloths and drainers were almost as bad.

So what do I make of these data? Since food picks up bacteria almost as soon as it hits the floor, I think you can safely say that the 5-second rule has been debunked. Fortunately, it turns out that the average household floor isn't that dirty, which means that the rule can be extended to 60 seconds! I usually throw JoJo's binkies into the kitchen sink to wash, but I've learned that reusing his toilet-tainted pacifier would have been less likely to make him sick.

If only I could get past the ick factor.

*True story, but the photo is a re-enactment. I thought about taking a photo when it really happened, but let's just say the bowl was, er, not clean. Like all the other moms I know, I bring my toddler into the bathroom with me so he's not left screaming outside the door. Don't worry, I threw away the second binky too.

**The participants were recruited from "ladies' social clubs," so you could argue that the ladies scrubbed down their houses before the research team arrived. The scientists thought ahead: they paid repeat, surprise visits and found no significant difference in their culture results.

Wednesday, November 2, 2011

The Kindest Cut, Part 2

Looks like I chose a touchy subject for my last blog post; there appear to be quite a few men mourning the loss of their infantile foreskin. Let me summarize some of the arguments made against my opinion on male circumcision, with my responses:

1. You cherry-picked studies showing a benefit for male circumcision. It's true that while numerous observational studies have shown a benefit in terms of UTIs and STDs, others have found no effect, or even the opposite effect. Conflicting results like these are the fundamental weakness of non-experimental, observational studies. That's why it's so important to look at randomized, controlled trials whenever possible.

2. The risk of UTIs in male infants is low and does not justify circumcision. I totally agree. The reduction in UTIs alone is not large or clinically important enough to advocate for this procedure.

3. The trials in Africa are flawed because they weren't double-blinded, and they were stopped early. OK, YOU design a study that does sham circumcision in the control group, and try to get that past an ethics committee. Stopping a trial early because of a significant benefit in the treatment group (and offering it to the control group) is the most ethical thing to do in this situation, since HIV is a life-threatening disease. It is true that stopping a study early for this reason tends to overestimate the benefit, and I might be suspicious of the results if they were seen in only one trial, but in fact, the benefits were seen in all three studies, in different parts of Africa. The Cochrane Group, which is extremely conservative in its recommendations, concluded, "Research on the effectiveness of male circumcision for preventing HIV acquisition in heterosexual men is complete. No further trials are required to establish this fact."

4. The trials in adult heterosexual African men don't apply to infants in the developed world. The majority of HIV infections in the U.S. and worldwide are due to unprotected sex. Sure, the absolute reduction in HIV infection with circumcision will be lower in the U.S. than in some parts of Africa, but relative risk reductions tend to remain constant over various patient populations. I do agree that if you're in a part of the world with extremely low rates of HIV infection (such as Australia -- which has a 0.004% annual risk of infection), routine circumcision may not make economic sense.

5. Why not promote safe sex instead? I'm not saying circumcision should be done instead of teaching safe sex. HIV prevention needs to be multi-pronged, and must also include education, free condom distribution, low-cost antiretroviral treatment (which reduces transmission rates) and needle exchanges.

6. Infants die from circumcision, and parents shouldn't be making this decision for them. Yes, babies will rarely die from circumcision, just as people will rarely die from having IVs inserted into their hands or having a severe allergic reaction to antibiotics (both of which I have seen). But AIDS is still a huge killer, even in developed countries. As for parents who want to let their sons make the decision about circumcision once they come of age, I think that's fine. Just realize that adult male circumcision is a bigger procedure, often involving general anesthesia, and may not be covered by insurance plans when done for purely preventive reasons.

7. Your story about Dr. Nick operating on your kid sounds fishy. Nope, absolutely true. I got a list of low-cost providers because I gave birth at my own public county hospital.

8. You're a terrible mom. OK, I will concede that in the moment that I let Dr. Nick circumcise my son, I was a terrible mom. I'm an imperfect parent, which is why I think a lot of people read my blog. If I had to do it all over again, I would still have my son circumcised, but I'd go with this guy instead:

Extra credit if you can name this Simpsons character*

9. The foreskin is a part of normal male anatomy, and removing it is mutilation. It occurred to me that this argument probably should have been #1, as many of you have a philosophical objection to circumcision. You think it's wrong to remove normal foreskin for any reason, and I don't. There's nothing we can say that will change each other's minds on this point.

And so we have a parting of the ways.
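Point 4 leans on the difference between absolute and relative risk reduction. Here is a minimal Python sketch of that arithmetic, offered only as an illustration and not as an analysis of the trials. It assumes, as point 4 does, that the relative risk reduction is the same everywhere; the 50% relative reduction and the 2% "high-prevalence" annual risk are made-up numbers, and only the 0.004% Australian figure comes from the text.

```python
# Illustration of point 4: the same relative risk reduction (RRR) gives very
# different absolute risk reductions (ARR) depending on the baseline risk.
# The RRR and the high-prevalence baseline are hypothetical, for illustration only.


def absolute_risk_reduction(baseline_risk: float, relative_risk_reduction: float) -> float:
    """Return ARR = baseline risk x RRR, with both inputs given as fractions."""
    return baseline_risk * relative_risk_reduction


ASSUMED_RRR = 0.5  # hypothetical 50% relative reduction, not a trial result

baselines = {
    "Australia (annual risk quoted above)": 0.00004,  # 0.004%, from the text
    "hypothetical high-prevalence setting": 0.02,     # assumed 2% annual risk
}

for setting, risk in baselines.items():
    arr = absolute_risk_reduction(risk, ASSUMED_RRR)
    # Print both the baseline risk and the absolute reduction as percentages.
    print(f"{setting}: baseline {risk:.3%}, absolute reduction {arr:.4%}")
```

Multiplying the same relative reduction by a tiny baseline risk yields a tiny absolute benefit, which is why routine circumcision may not make economic sense where HIV is rare.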

*It's Krusty the clown's dad, Rabbi Hyman Krustofski.

Tuesday, November 1, 2011

The Kindest Cut?

When J.J. was born, our hospital gave us a list of outside physicians who performed circumcisions. My husband called every provider on the list and made an appointment with the second cheapest one -- the same process by which he selects a bottle from a restaurant wine list. I knew we were in trouble when we were greeted by none other than....